Satisfying NAS share and backup solution

A short and to the point article.

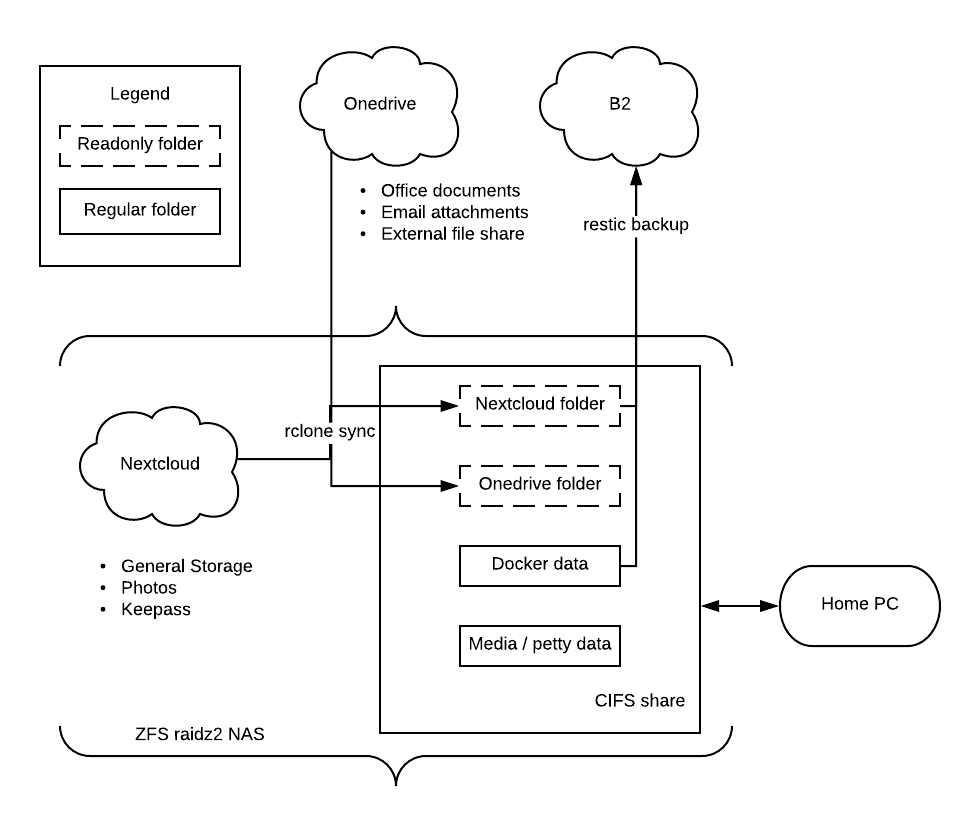

I have:

- A NAS configured with 5 disks in raidz2 with auto snapshots

- Docker applications like gitea running on the NAS

- Users who access the NAS over a CIFS share via their client of choice (Mac or Windows)

- Users who also have documents stored in cloud providers like Microsoft’s OneDrive

This setup is ok, but there are many improvements to make.

With a cloud file hosting service, users always work on the latest version of a file, so an accidental overwrite or deletion can turn into a frustrating data recovery exercise. Rclone lets us pull all of a user’s files into a local directory on the CIFS share that is automatically snapshotted. After initial configuration, keeping the local directory in sync is as simple as:

rclone sync onedrive:/ /tank/nick/onedrive

Run this hourly or daily; we don’t need real-time syncing of files. The local copy on the NAS isn’t meant to be worked on, since any modifications won’t be replicated back out to the cloud (two-way sync is too much of a hassle given the potential for merge conflicts). Thus we can make the network folder read-only. Below, a service account creates a directory that only the service account can write to. The group is “nas” so that NAS users can access the location.

sudo -u dumper mkdir --mode=u+rwx,g+rs,o-rwx -p "$location"

sudo -u dumper chown dumper:nas "$location"
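The hourly or daily sync mentioned above could be wrapped in a small function before it goes into a crontab. This is only a sketch: the `RCLONE` and `LOCK_ROOT` variables and the `sync_remote` name are my own illustrative choices, not rclone settings. The one thing it adds over the bare command is a guard so that a slow run does not overlap with the next one.

```shell
# Sketch of a cron-friendly wrapper around the rclone sync shown earlier.
# RCLONE and LOCK_ROOT are illustrative knobs, not rclone options.
RCLONE="${RCLONE:-rclone}"
LOCK_ROOT="${LOCK_ROOT:-${TMPDIR:-/tmp}}"

sync_remote() {
    remote="$1"   # e.g. onedrive:/
    dest="$2"     # e.g. /tank/nick/onedrive

    # Derive a lock name from the destination. mkdir is atomic, so it
    # works as a simple guard against two overlapping runs.
    lock="$LOCK_ROOT/rclone-sync$(printf '%s' "$dest" | tr '/' '_').lock"
    if ! mkdir "$lock" 2>/dev/null; then
        echo "sync into $dest is already running, skipping" >&2
        return 0
    fi

    "$RCLONE" sync "$remote" "$dest"
    status=$?
    rmdir "$lock"
    return "$status"
}
```

A crontab entry for the service account would then source this file and call `sync_remote onedrive:/ /tank/nick/onedrive`. Note that a crashed run leaves the lock directory behind, so it would need to be removed by hand.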

Now users can view their cloud data on the network (performance boost) and rest easy knowing that their data can be recovered. Users wishing to add or modify data can still use official clients like the OneDrive client built into Windows.
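To make "can be recovered" concrete: ZFS exposes snapshots through a hidden `.zfs/snapshot` directory at the root of each dataset. The sketch below assumes the share (for example `/tank/nick`) is the mountpoint of its own dataset; the function name is hypothetical. It lists every snapshotted copy of a single file so that the right version can be copied back.

```shell
# List every snapshotted copy of one file.
# $1: dataset mountpoint (assumption: e.g. /tank/nick is its own dataset)
# $2: path of the file relative to that mountpoint
list_snapshot_versions() {
    mountpoint="$1"
    relpath="$2"
    for copy in "$mountpoint"/.zfs/snapshot/*/"$relpath"; do
        # An unmatched glob stays literal, so only print paths that exist.
        if [ -e "$copy" ]; then
            printf '%s\n' "$copy"
        fi
    done
    return 0
}
```

Calling `list_snapshot_versions /tank/nick onedrive/report.docx` (a made-up file name) would print one path per snapshot that still contains the file, and a plain `cp` of the chosen path restores it.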

Cloud file providers offer a limited amount of free space (rightfully so), and those who want to store sensitive data may be uneasy about “giving up” their data. A fix is to spin up a Nextcloud instance on the NAS and allow users to store documents there (with end-to-end encryption). Users can use the web interface or the official Nextcloud apps to interact with their files.

Nextcloud can be used as a complementary solution. OneDrive still has excellent online editing and sharing capabilities within the Microsoft ecosystem, so keep files on the cloud provider or on the network share as appropriate. You can have your cake and eat it too. We can use rclone to sync a user’s Nextcloud directory to the network share as a read-only directory. It might seem odd to rclone a Nextcloud instance on the same box, but it future-proofs operations in case Nextcloud is moved offsite, and it keeps things consistent across all file providers, self-hosted or otherwise.
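Treating every provider the same way means the sync job can be a plain loop. The sketch below assumes rclone remotes named `onedrive` and `nextcloud` have already been configured (rclone can reach Nextcloud through its WebDAV backend) and that each one is mirrored into a directory of the same name; the function name and the `RCLONE` variable are illustrative.

```shell
RCLONE="${RCLONE:-rclone}"   # illustrative override, handy for dry runs

# Mirror each named remote into <share_root>/<remote name>.
# Usage: sync_all_remotes /tank/nick onedrive nextcloud
sync_all_remotes() {
    share_root="$1"
    shift
    failed=0
    for remote in "$@"; do
        # Keep going if one provider fails, but remember the failure.
        "$RCLONE" sync "$remote:/" "$share_root/$remote" || failed=1
    done
    return "$failed"
}
```

If Nextcloud later moves offsite, only the remote's configuration changes; the loop stays as it is.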

Since we’re self-hosting data on our own servers, far more can go wrong and cause irreversible data loss (human error, theft, accident, damage, nature, etc.). We need a backup solution; restic to the rescue. Restic will obscure file names, encrypt data, deduplicate, and create snapshots. The only things you need to supply are the directory and the location of the backup. Restic works with a host of providers, so we’ll go with the cheapest: Backblaze B2. Invoking the backup is quite easy:

restic -r b2:<backblaze-id>:/repositories backup /tank/containers/gitea-app/git/repositories

While you can have restic back up everything to the root directory, I’ve decided to break backups apart logically, so that apps and users get their own directory. This lets them encrypt with a different password if they’d like, and it also cuts down on noise when restoring a backup.
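One way to sketch the "one repository per app or user" idea is a helper that derives both the repository path and the password file from a name. The `PASS_DIR` location, the `.pass` naming, and the function name are assumptions for illustration. Restic reads the B2 credentials from the `B2_ACCOUNT_ID` and `B2_ACCOUNT_KEY` environment variables, and each repository must have been created once with `restic init`.

```shell
RESTIC="${RESTIC:-restic}"            # illustrative override, handy for dry runs
PASS_DIR="${PASS_DIR:-/etc/restic}"   # hypothetical home for per-repo password files

# Back up one directory into its own repository inside the bucket.
# Usage: backup_to_own_repo <bucket> <repo-name> <source-dir>
backup_to_own_repo() {
    bucket="$1"
    name="$2"
    src="$3"
    "$RESTIC" -r "b2:$bucket:/$name" \
        --password-file "$PASS_DIR/$name.pass" \
        backup "$src"
}
```

The git repositories from the command above would then be backed up with `backup_to_own_repo <backblaze-id> repositories /tank/containers/gitea-app/git/repositories`, and a user's data could go to a separate repository with its own password.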

Backblaze serves as a great backup solution for Docker application data. I haven’t moved to backing up databases there (that’s the next step), but so far it has been working great as a git repo backup.

It should go without saying that one should back up only important data to keep costs down. Security camera footage, media files (home movies can be exempt!), and any data that can be treated as disposable don’t need to be backed up. For those, raidz2 is enough of a “backup”.

So what do we have:

My backup workflow

- Microsoft OneDrive (free tier):

  - Stores office documents, email attachments (auto-saved from Outlook), and any files that need to be shared externally

  - Cloned to a read-only directory on the network. This directory is ZFS snapshotted to provide additional data recovery

  - Users modify files on OneDrive using official clients

  - Doesn’t need to be backed up to Backblaze B2 (optional)

- Nextcloud:

  - Provides as much storage as your NAS can handle, with additional privacy and security benefits

  - Cloned to a read-only directory on the network. This directory is ZFS snapshotted to provide additional data recovery

  - Users modify files on Nextcloud using official clients

  - Backed up to Backblaze B2

- Network Share (CIFS):

  - Has a complete view of all data (at least read-only)

  - ZFS snapshots as backup

  - Select folders are backed up to B2 (user data, some container data)

- Backblaze B2:

  - Cheapest object storage out there

  - Everything backed up is encrypted, with file names obscured
