Advantages of opaque types over value objects

Most developers would agree that the following signature is in bad taste:

type MyObject = {
  price: number;
}

Is the price in dollars, in cents, or even in some other currency? What’s protecting another developer (including your future self) from footguns?

// Mixing up dollars and cents
const discountDollars = 2;
const productCents = 10000;
const product: MyObject = { price: productCents };
console.log(product.price - discountDollars); // 9998, not the intended 9800

Before diving into opaque types, let’s see how far we can get by wrapping price in a class.

Value objects with classes

Let’s start simple: an immutable class that keeps money as cents.

class Money {
  private readonly cents: number;
  constructor(value: number, unit: "$" | "¢") {
    const result = unit === "¢" ? value : value * 100;
    // don't forget to round, otherwise you'll have
    // $40.8 looking like $40.7999999...
    this.cents = Math.round(result);
  }
  asCents() {
    return this.cents;
  }
  sub(rhs: Money) {
    return new Money(this.cents - rhs.cents, "¢");
  }
}

We can then rewrite our example as:

type MyObject = {
  price: Money;
}
const discount = new Money(2, "$");
const price = new Money(10000, "¢");
const product: MyObject = { price };
console.log(product.price.sub(discount).asCents()); // 9800

Problem: referential equality

Since the dawn of JS, developers have wondered how best to check whether two objects are structurally equal.

const price1 = new Money(2, "$");
const price2 = new Money(2, "$");
console.log(price1 == price2); // false :(

So, in the spirit of the JS ecosystem, one throws a dependency at the problem and washes their hands of this nuisance.

import { dequal } from 'dequal';
const price1 = new Money(2, "$");
const price2 = new Money(2, "$");
console.log(dequal(price1, price2)); // true

But wait: this breaks down as soon as a library applies its own structural equality check to our Money objects with a different implementation. We can see this in TanStack Query, where we might want to transform a server response into Money objects.

import { useQuery } from "@tanstack/react-query";
const cartApiKeys = /* ... */;
const cartApi = /* ... */;
useQuery({
  queryKey: cartApiKeys.fetch(),
  queryFn: cartApi.fetch,
  select: (data) => ({
    ...data,
    price: new Money(data.price, "¢")
  })
})

Behind the scenes, TanStack Query runs code like the following:

import { replaceEqualDeep } from '@tanstack/react-query';
const price1 = new Money(2, '$');
const price2 = new Money(2, '$');
const result = replaceEqualDeep(price1, price2);
if (result === price1) {
  throw new Error("nope, guessed wrong");
} else {
  console.log("price1 and price2 are not equal");
}

Does this mean it’s a bug in the library, or that we should supply our own equality function? I’d argue the answer to both is no; otherwise we’d be playing whack-a-mole, trying to turn every referential equality comparison into a structural one that meets our criteria. And good luck avoiding accidental re-runs of React hooks, since dependency arrays are compared with Object.is.
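To make the dependency-array point concrete, here is a simplified stand-in for the comparison React performs on hook dependencies. depsChanged is a hypothetical helper, not React's API; it only mirrors the documented rule that each dependency is compared with its previous value using Object.is.

```typescript
// Minimal Money value object (cents only), mirroring the class above.
class Money {
  private readonly cents: number;
  constructor(value: number, unit: "$" | "¢") {
    this.cents = Math.round(unit === "¢" ? value : value * 100);
  }
  asCents() {
    return this.cents;
  }
}

// Hypothetical helper: a simplified version of how React decides whether
// an effect's dependencies changed (each slot compared with Object.is).
function depsChanged(prev: readonly unknown[], next: readonly unknown[]): boolean {
  if (prev.length !== next.length) return true;
  return prev.some((dep, i) => !Object.is(dep, next[i]));
}

// Two renders that each construct "the same" price.
const firstRender = [new Money(2, "$")];
const secondRender = [new Money(2, "$")];

console.log(depsChanged(firstRender, secondRender)); // true: a new instance counts as a change
console.log(depsChanged([200], [200])); // false: primitives compare by value
```

Every render that rebuilds a Money from the same data would re-trigger the effect, even though nothing meaningful changed.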

Now, if you want to blame JS for this, that’s ok. With Java and C# gaining pseudo-value types via records, JS is looking awfully left out in the cold. Languages like Rust have wholeheartedly embraced structural equality from the start.

We can force referential equality by interning Money instances and hiding the constructor, so that equal amounts always share one object:

const moneyIntern = new Map<number, Readonly<Money>>();
class Money {
  private constructor(private readonly cents: number) {}
  private static addToMap(cents: number) {
    const value = Object.freeze(new Money(cents));
    moneyIntern.set(cents, value);
    return value;
  }
  static from(value: number, unit: "$" | "¢") {
    const result = unit === "¢" ? value : value * 100;
    const cents = Math.round(result);
    return moneyIntern.get(cents) ?? Money.addToMap(cents);
  }
  // ...
}

We no longer have to worry about structural equality, as Money objects with the same value are now the same reference:

const price1 = Money.from(2, "$");
const price2 = Money.from(2, "$");
console.log(price1 == price2); // true

Object pools, interners, and other object caches are typically touted for their memory efficiency, but here we are more interested in the referential equality they provide.

A new problem arises, though: once a Money value is interned, it sticks around forever. Reclaiming unused interned values is a known problem. Luckily, JS lets us hook into the garbage collector with WeakRef and FinalizationRegistry, so we can find out when a given money amount has been collected and remove it from the intern pool.

const moneyIntern = new Map<number, WeakRef<Readonly<Money>>>();
const registry = new FinalizationRegistry<number>((key) => {
  if (!moneyIntern.get(key)?.deref()) {
    moneyIntern.delete(key);
  }
});
class Money {
  private constructor(private readonly cents: number) {}
  private static addToMap(cents: number) {
    const value = Object.freeze(new Money(cents));
    moneyIntern.set(cents, new WeakRef(value));
    registry.register(value, cents);
    return value;
  }
  static from(value: number, unit: '$' | '¢') {
    const result = unit === '¢' ? value : value * 100;
    const cents = Math.round(result);
    return moneyIntern.get(cents)?.deref() ?? Money.addToMap(cents);
  }
  // ...
}

As MDN outlines, WeakRef and FinalizationRegistry come with several caveats, so proceed with caution. This usage should be safe, though, since we only use the finalizer for cleanup.

We did it, right? We created a value object without downsides, right?

Problem: serialization

Objects rarely exist only in memory. At some point they must be transmitted, and the data format everyone instinctively reaches for is JSON. Full-stack React frameworks like Next.js and Remix embed the data used in page rendering as JSON, so a serializable data model is important.

We can serialize our value object if we are fine with exposing internals:

JSON.stringify(Money.from(2, "$"))
// {"cents":200}

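I think this is dangerous. TypeScript’s private modifier is erased at compile time, so cents is an ordinary property at runtime. If we rename the cents field to value, we may think we are safe because it is a private field, but we have unknowingly changed the JSON format.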

The bigger problem is deserialization. JSON.parse won’t turn the parsed { cents } object back into Money for us.

const req = JSON.stringify(Money.from(2, "$"));
const res/*: ??? */ = JSON.parse(req);
const result = Money.from(res.cents, "¢");

For every value type, we need to define a separate type for the wire format, keep it in sync by hand, and then transform the parsed data into an actual Money object. These two downsides are where opaque types shine.
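A sketch of that extra bookkeeping for a non-interned version of the Money class. MoneyJson and moneyFromJson are hypothetical names introduced for illustration; note that MoneyJson silently depends on the private field being called cents.

```typescript
class Money {
  private constructor(private readonly cents: number) {}
  static from(value: number, unit: "$" | "¢"): Money {
    return new Money(Math.round(unit === "¢" ? value : value * 100));
  }
  asCents(): number {
    return this.cents;
  }
}

// Hypothetical wire-format type: must be kept in sync with Money's private
// field by hand, because JSON.stringify emits `cents` verbatim.
type MoneyJson = { cents: number };

// Hypothetical conversion back into a real Money instance.
function moneyFromJson(json: MoneyJson): Money {
  return Money.from(json.cents, "¢");
}

const wire = JSON.stringify(Money.from(2, "$")); // '{"cents":200}'
const restored = moneyFromJson(JSON.parse(wire) as MoneyJson);

console.log(restored instanceof Money, restored.asCents()); // true 200
```

Multiply this pair of type and conversion by every value type in the data model, and the maintenance cost adds up.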

Opaque types

Let’s look at our Money from the point of view of an opaque type.

declare const tag: unique symbol;
type Opaque<K, T> = K & { readonly [tag]: T };
// Also consider the ready-made `Opaque` type in type-fest:
// https://github.com/sindresorhus/type-fest
type Money = Opaque<number, "Money">;
const Money = {
  from(value: number, unit: '$' | '¢'): Money {
    const result = unit === '¢' ? value : value * 100;
    const cents = Math.round(result);
    return cents as Money;
  }
}

const price1 = Money.from(2, '$');
const price2 = Money.from(2, '$');
console.log(price1 == price2); // true

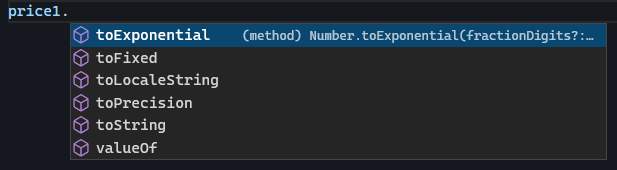

It looks pretty good, until we try to access the properties on price and see the internal type exposed via the methods available.

Price sharing that it is a number

We can paper over the exposure by renaming the tag so that IntelliSense itself suggests using the official functions. A hypothetical Money.format is much more suitable than trying to format an opaque number yourself.

type Opaque<K, T> = K & { readonly __USE_METHODS_ON: T };
type Money = Opaque<number, "Money">;

It’s not a perfect solution, but it’s tolerable. Decoupling data from functions shouldn’t feel too unfamiliar to JS developers, especially Redux users, as the Redux docs recommend selector functions for deriving additional data.
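A sketch of such a companion object for the number-backed Money, including the hypothetical Money.format built on the standard Intl.NumberFormat. Formatting as US dollars in the en-US locale is an assumption for illustration.

```typescript
// Tag from the article: the property name shows up in IntelliSense as a hint.
type Opaque<K, T> = K & { readonly __USE_METHODS_ON: T };
type Money = Opaque<number, "Money">;

// Assumption: amounts are US dollars, formatted for the en-US locale.
const usd = new Intl.NumberFormat("en-US", { style: "currency", currency: "USD" });

const Money = {
  from(value: number, unit: "$" | "¢"): Money {
    return Math.round(unit === "¢" ? value : value * 100) as Money;
  },
  // Hypothetical formatter: the only place that knows Money is stored in cents.
  format(money: Money): string {
    return usd.format(money / 100);
  },
};

console.log(Money.format(Money.from(2, "$"))); // "$2.00"
console.log(Money.format(Money.from(4080, "¢"))); // "$40.80"
```

Callers never need to know the number is in cents; only the companion object does.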

Some consider the inability to assign a literal to an opaque type a downside.

const price3: Money = 2; // compilation error

I see the compilation error as a win, as one should always strive to parse data into the appropriate type.
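Parsing here means funnelling untrusted input through a function that checks it before handing back the opaque type. A minimal sketch, assuming Money is stored as integer cents; parseMoney is a hypothetical name, not part of the article's API.

```typescript
type Opaque<K, T> = K & { readonly __USE_METHODS_ON: T };
type Money = Opaque<number, "Money">;

// Hypothetical parser: a sanctioned way (besides Money.from) to obtain
// a Money from untrusted input. Assumes Money is stored as integer cents.
function parseMoney(input: unknown): Money {
  if (typeof input !== "number" || !Number.isSafeInteger(input)) {
    throw new TypeError(`expected integer cents, got ${JSON.stringify(input)}`);
  }
  return input as Money;
}

const ok = parseMoney(200);
console.log(ok); // 200
// const price3: Money = 2; // still a compilation error

try {
  parseMoney("200");
} catch (e) {
  console.log((e as Error).message); // expected integer cents, got "200"
}
```

The same kind of check is what a server would run on anything a client sends.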

For serialization, opaque types solve the issue, as they represent only data. That means the following is valid:

const req = JSON.stringify(Money.from(2, "$"));
const res: Money = JSON.parse(req);

The implicit cast (JSON.parse returns any) may seem unnerving, but it’s fine on the client, unless you want to dive into the merits of validating server responses on the client and pay the cost of running zod over the data.

On the server, it should be business as usual: don’t trust anything clients send.

Optionally, we may want a more descriptive structure for our money, so that a weary-eyed developer or a sysadmin looking at logs doesn’t need to cross-reference what the number represents.

There are a couple of options:

type MyObject2 = {
  price: Opaque<{ cents: number }, "Money2">;
}
type MyObject3 = {
  priceCents: Opaque<number, "Money3">;
}

I’d prefer the first type, as putting the unit of measurement in the field name may not reflect how a third party stores the field, and could thus give a false impression.

Because the first type uses a plain object rather than a class instance, a wider range of structural equality checks (like the one in TanStack Query) can handle it without any code on our part.
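Deep-equality helpers generally only recurse into values they recognise as plain objects or arrays, and treat anything else by reference. The isPlainObject function below is a hypothetical simplification of that kind of gate, not TanStack Query's actual code; it shows why the object-backed opaque type qualifies while a class instance does not.

```typescript
type Opaque<K, T> = K & { readonly __USE_METHODS_ON: T };
type Money2 = Opaque<{ cents: number }, "Money2">;

class MoneyClass {
  constructor(readonly cents: number) {}
}

// Hypothetical check in the spirit of what deep-equality helpers use to decide
// whether they may recurse into a value: only plain objects qualify.
function isPlainObject(value: unknown): value is Record<string, unknown> {
  if (typeof value !== "object" || value === null) return false;
  const proto = Object.getPrototypeOf(value);
  return proto === Object.prototype || proto === null;
}

const opaque = { cents: 200 } as Money2;
const instance = new MoneyClass(200);

console.log(isPlainObject(opaque)); // true: eligible for structural comparison
console.log(isPlainObject(instance)); // false: compared by reference only
```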

We’re not so lucky with referential equality. It might be tempting to reuse the interning from earlier to get the same object for the same value, but interning only works with immutable data, like we set up in the class-based approach. We can get something pretty close to immutable with:

type Money = Opaque<Readonly<{ cents: number }>, "Money">;
const Money = { /* ... */ }
const price1 = Money.from(2, '$');
// Fake immutability, as this will mutate cents
Object.assign(price1, { cents: 1 });
console.log(price1.cents);

Readonly only exists at compile time, so these immutability guarantees are weaker and shouldn’t be relied on too heavily. The class-based approach used Object.freeze to ensure immutability; otherwise Object.assign could poison the interning.
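One way to claw back some runtime immutability is to freeze inside the factory, as the class did. A sketch assuming an object-backed Money whose from() we control; values produced by JSON.parse bypass from() and are therefore not frozen, so this only protects values created through the factory.

```typescript
type Opaque<K, T> = K & { readonly __USE_METHODS_ON: T };
type Money = Opaque<Readonly<{ cents: number }>, "Money">;

const Money = {
  from(value: number, unit: "$" | "¢"): Money {
    const cents = Math.round(unit === "¢" ? value : value * 100);
    // Freeze so Object.assign and friends cannot mutate the value at runtime.
    return Object.freeze({ cents }) as Money;
  },
};

const price1 = Money.from(2, "$");

try {
  // Object.assign throws a TypeError when writing to a frozen object's property.
  Object.assign(price1, { cents: 1 });
} catch (e) {
  console.log(e instanceof TypeError); // true
}
console.log(price1.cents); // 200

// Caveat: JSON.parse output skips the factory, so it is not frozen.
const parsed = JSON.parse(JSON.stringify(price1)) as Money;
console.log(Object.isFrozen(parsed)); // false
```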

Another potential inconvenience is that the functions lack self-awareness (they are free functions rather than methods), which in my opinion results in less readable code.

const price4 = Money.from(3, "$");
const price5 = Money.from(2, "$");
const price6 = Money.sub(price4, price5);
// Can't do `price4.sub(price5)`
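The article doesn't show Money.sub itself, so the following is an assumed implementation for the number-backed Money: since both operands are cents, subtracting and re-tagging the result is enough.

```typescript
type Opaque<K, T> = K & { readonly __USE_METHODS_ON: T };
type Money = Opaque<number, "Money">;

const Money = {
  from(value: number, unit: "$" | "¢"): Money {
    return Math.round(unit === "¢" ? value : value * 100) as Money;
  },
  // Hypothetical: both operands are cents, so the difference is too.
  sub(lhs: Money, rhs: Money): Money {
    return (lhs - rhs) as Money;
  },
};

const price4 = Money.from(3, "$");
const price5 = Money.from(2, "$");
const price6 = Money.sub(price4, price5);

console.log(price6); // 100
// Money.sub(price4, 200); // compilation error: a plain number is not Money
```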

The API is a bit awkward, but it seems to be gaining popularity: the Dinero.js 2 alpha switched to the same approach:

import { dinero, subtract, type Dinero } from 'dinero.js';
import { USD } from '@dinero.js/currencies';
const price = dinero({ amount: 5000, currency: USD });
const discount = dinero({ amount: 1000, currency: USD });
const salePrice = subtract(price, discount);
const data = JSON.stringify(salePrice);
const back = dinero(JSON.parse(data));

While the above shows that functions like subtract have a similar API, it also demonstrates that a Dinero object isn’t a plain object and needs an extra step when transporting and restoring values. It’s all about tradeoffs.

Conclusion

Value objects as classes are the most ergonomic from a pure OOP perspective. Having all data and functions at your fingertips on each instance results in the most readable code, once interning has been set up for referential equality.

However, opaque types are much easier to embed in transmitted data, and they work out of the box with a wide range of structural equality checks.

There are use cases for preferring one over the other, but I plan on defaulting to opaque data types in the future, as the downsides aren’t enough to deter me.
